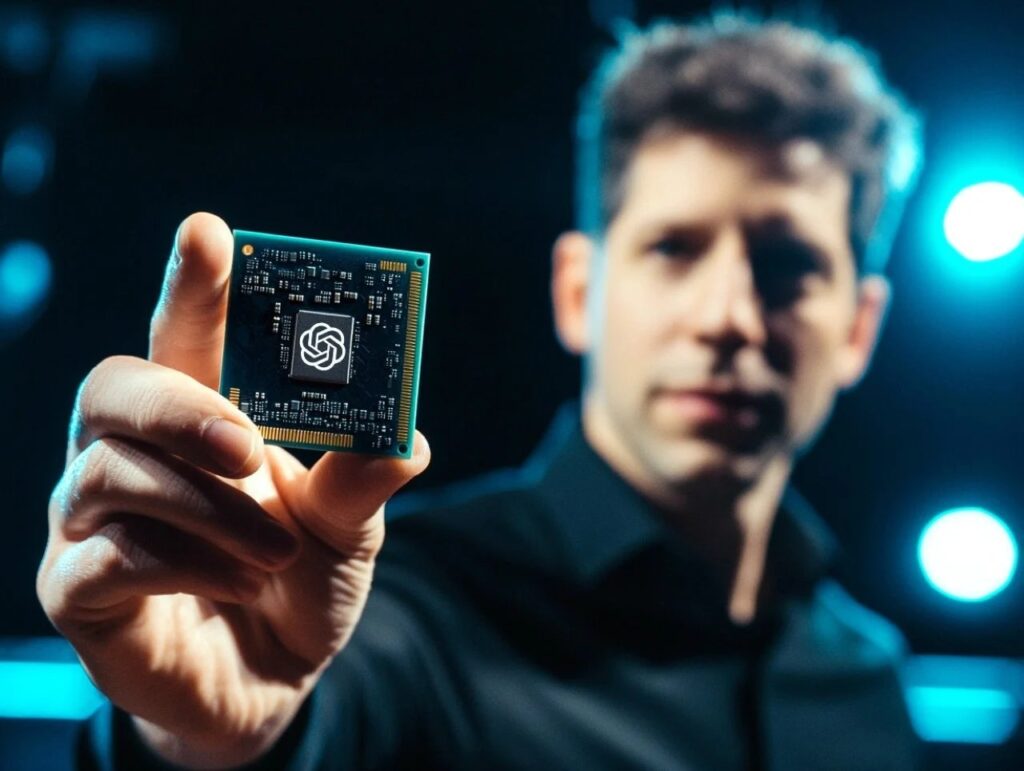

OpenAI is developing its own AI chip to meet the heavy compute demands of running advanced models, with a launch targeted for 2026. The company, known for ChatGPT, has partnered with Broadcom for design and TSMC for manufacturing, which spares it the cost of building its own factory.

Why move away from its traditional supplier? OpenAI is one of Nvidia’s major GPU buyers, but with Nvidia’s prices rising, the company wants to reduce its reliance on a single supplier. Adding AMD’s MI300X chips to the mix diversifies its options further.

Broadcom is contributing to the chip’s design, including the parts that keep data moving smoothly across many interconnected chips, while TSMC’s production expertise gives OpenAI a way to scale up as AI workloads grow. The approach shows how OpenAI is meeting its infrastructure needs in a fast-changing chip market.

OpenAI’s move into custom chips is about more than cost-cutting or specs; it is about control over its own technology. By designing chips tailored for inference (the phase in which a trained model applies what it has learned to new inputs), OpenAI aims to optimize real-time processing for tools like ChatGPT. Beyond efficiency, it is a strategic play to establish itself in an arena where Google and Meta have already set the pace.

What’s clever is that OpenAI isn’t burning bridges with its existing suppliers. Even as it works on its custom chip, OpenAI remains aligned with Nvidia, which keeps its access to the latest Blackwell GPUs. It’s a balancing act: keeping key partnerships intact while quietly preparing to stand more independently.